Self-Hosting Docker Hub using GitLab, Portainer, and Unraid (kind of)

I hate writing posts like this, where I got something to work but not the way I wanted. Still, I figured I’d detail my journey and my eventual working config.

The goals:

- Write a Node app on my desktop (using WSL) that listens for Twitch events and triggers a Discord message, both using webhooks.

- Containerize that Node app.

- Push the code and Dockerfile to GitLab.

- Use Portainer to pull the image from GitLab and host it on Unraid.
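The post doesn’t show the notifier itself, so here’s a rough sketch of the webhook flow from the first goal. It’s Python rather than Node, and it assumes an EventSub `stream.online` subscription: Twitch signs each delivery with HMAC-SHA256 over the message ID, timestamp, and raw body, expects the `challenge` echoed back when the subscription is first verified, and the Discord side is just a JSON POST with a `content` field. The function names, message text, and User-Agent string are hypothetical placeholders, not the author’s app.

```python
import hashlib
import hmac
import json
import urllib.request

# Header names Twitch EventSub sends with webhook deliveries. A plain dict is
# used here, so lookups are case-sensitive (real HTTP frameworks usually aren't).
MSG_ID = "Twitch-Eventsub-Message-Id"
MSG_TIMESTAMP = "Twitch-Eventsub-Message-Timestamp"
MSG_SIGNATURE = "Twitch-Eventsub-Message-Signature"
MSG_TYPE = "Twitch-Eventsub-Message-Type"


def verify_signature(secret: str, headers: dict, body: bytes) -> bool:
    """Recompute Twitch's HMAC-SHA256 over message id + timestamp + raw body."""
    message = headers.get(MSG_ID, "").encode() + headers.get(MSG_TIMESTAMP, "").encode() + body
    expected = "sha256=" + hmac.new(secret.encode(), message, hashlib.sha256).hexdigest()
    # Constant-time comparison so the check doesn't leak timing information.
    return hmac.compare_digest(expected.encode(), headers.get(MSG_SIGNATURE, "").encode())


def handle_eventsub(secret: str, headers: dict, body: bytes):
    """Return (http_status, response_text, discord_payload_or_None) for one delivery."""
    if not verify_signature(secret, headers, body):
        return 403, "bad signature", None
    data = json.loads(body)
    kind = headers.get(MSG_TYPE)
    if kind == "webhook_callback_verification":
        # Twitch confirms the subscription by expecting the challenge echoed back.
        return 200, data["challenge"], None
    if kind == "notification" and data["subscription"]["type"] == "stream.online":
        event = data["event"]
        text = f"{event['broadcaster_user_name']} is live! https://twitch.tv/{event['broadcaster_user_login']}"
        return 204, "", {"content": text}
    # Revocations and other event types: acknowledge, but send nothing to Discord.
    return 204, "", None


def post_to_discord(webhook_url: str, payload: dict) -> int:
    """POST a message to a Discord webhook URL and return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json", "User-Agent": "twitch-notifier-sketch"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

In a real server, the status and text go back to Twitch, and any non-`None` payload gets handed to `post_to_discord`.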

1 & 2. Making the Notifier

I might do a separate post about writing a Node app to work as a notifier, but it was pretty straightforward once I compromised on how I wanted to do things. Containerizing it was stupidly easy (Node has a great walkthrough on this). It basically came down to building the image from the application’s folder:

`docker build . -t alaskanbeard/twitch-notifier`

Then I was able to run it without issue:

`docker run --env-file ./.env -p 3000:3000 -d alaskanbeard/twitch-notifier:latest` (3000 is the default port, so I just left it as-is for testing).
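Since that run command leans on `--env-file`, it helps to know how Docker reads the file. The Python below is an approximation of the rules as commonly documented, not Docker’s actual code: blank lines and `#` comments are skipped, the value is everything after the first `=` taken literally, and a bare `NAME` copies the host’s value. The literal part is the usual gotcha, because a dotenv-style `TOKEN="abc"` reaches the container with the quotes still attached. The variable names in the usage are hypothetical.

```python
import os


def parse_env_file(text: str, host_env=None) -> dict:
    """Approximate `docker run --env-file` parsing (a model, not Docker's code).

    - blank lines and lines starting with '#' are skipped
    - NAME=value keeps everything after the first '=' literally (quotes included)
    - a bare NAME copies the host's value, or is skipped if the host lacks it
    """
    host_env = os.environ if host_env is None else host_env
    result = {}
    for raw in text.splitlines():
        line = raw.lstrip()
        if not line or line.startswith("#"):
            continue
        name, has_value, value = line.partition("=")
        if not name or any(ch.isspace() for ch in name):
            raise ValueError(f"bad variable name in line: {raw!r}")
        if has_value:
            result[name] = value
        elif name in host_env:
            result[name] = host_env[name]
    return result
```

For example, `parse_env_file('PORT=3000\nTOKEN="abc"\n')` yields `{"PORT": "3000", "TOKEN": '"abc"'}`, so an app that expects `abc` would see the quotes too.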

3. Enabling GitLab’s Container Registry

Getting things to work with GitLab was a little more complicated. The first hurdle was getting the GitLab container I’m using to enable its registry. That turned out to be simple, but it was a bit challenging to figure out since there’s conflicting information out there (and Unraid makes things… unique). I tried a bunch of different configs, including updating my `gitlab.rb`, mapping the default port in my container template (Unraid uses templates similar to docker compose, but much more user friendly), and manually adding `registry_external_url` as a variable in the template.

What I had to do in the end was add `registry_external_url 'http://ipaddress:port'` to the `--env GITLAB_OMNIBUS_CONFIG` I was already passing for my `external_url`. It looks like this now: `--env GITLAB_OMNIBUS_CONFIG="external_url 'http://ipaddress:port';registry_external_url 'http://ipaddress:port'"`. With that hurdle cleared, I had to get Docker Desktop to push to my GitLab instance, which was relatively easy once I learned to read.
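To make the shape of that value explicit: GitLab’s Docker image evaluates `GITLAB_OMNIBUS_CONFIG` as Ruby (the same syntax as `gitlab.rb`), which is why two settings can share one variable separated by `;`. The helper below is only an illustration. The IPs and ports in the test are made-up placeholders, and the sketch deliberately refuses values that would need shell or Ruby escaping instead of trying to escape them.

```python
def omnibus_config(settings: dict) -> str:
    """Join gitlab.rb-style settings into one GITLAB_OMNIBUS_CONFIG value.

    The value is evaluated as Ruby, so ';' separates the statements.
    """
    parts = []
    for key, value in settings.items():
        if any(ch in value for ch in "'\"$`\\"):
            # Keep the sketch simple: refuse anything that would need escaping.
            raise ValueError(f"value for {key} needs escaping: {value!r}")
        parts.append(f"{key} '{value}'")
    return ";".join(parts)


def docker_env_flag(settings: dict) -> str:
    """Format the setting as a double-quoted --env flag for a shell command line."""
    return f'--env GITLAB_OMNIBUS_CONFIG="{omnibus_config(settings)}"'
```

Passing `{"external_url": ..., "registry_external_url": ...}` produces the same single-variable form as the working `--env` line above.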

3.5. Pushing to GitLab from Docker Desktop

The only issue I had with pushing to GitLab from WSL (via Docker Desktop) was that my GitLab uses HTTP instead of HTTPS, so Docker throws an error about trying to connect to an insecure host over a secure (HTTPS) connection. The fix for a traditional Docker install is to add the following to your `daemon.json` (or create the file if it doesn’t exist):

`{ "insecure-registries": [ "gitlabIP:port" ] }`

I did that, and of course it didn’t work.
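If you do go the `daemon.json` route on a regular Linux Docker install (the file usually lives at `/etc/docker/daemon.json`, and the daemon needs a restart afterward), it’s easy to clobber settings that are already in there. Here’s a small, hypothetical helper that merges a registry into `insecure-registries` instead of overwriting the file. The registry addresses in the test are placeholders.

```python
import json
from pathlib import Path


def add_insecure_registry(daemon_json: str, registry: str) -> str:
    """Return daemon.json text with `registry` listed under insecure-registries.

    Existing keys are preserved, and the entry is only added once.
    """
    config = json.loads(daemon_json) if daemon_json.strip() else {}
    registries = config.setdefault("insecure-registries", [])
    if registry not in registries:
        registries.append(registry)
    return json.dumps(config, indent=2) + "\n"


def update_daemon_file(path: str, registry: str) -> None:
    """Apply add_insecure_registry to a file on disk, creating it if missing."""
    target = Path(path)
    current = target.read_text() if target.exists() else ""
    target.write_text(add_insecure_registry(current, registry))
```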

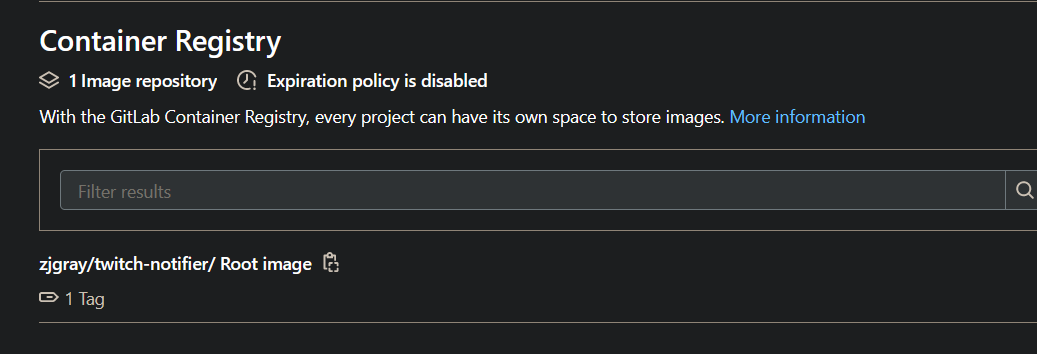

Turns out, if I’d just read the asterisk that said, “If you’re using Docker Desktop, do this,” I’d have been set. It’s the same setting, but you add it in the GUI (Settings → Docker Engine). Once that’s done, it’s as simple as `docker login gitlabIP:port -u myusername` (entering my password when prompted), `docker build . -t gitlabIP:port/username/twitch-notifier`, and `docker push gitlabIP:port/username/twitch-notifier`. Then we get something like this :)
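The tag in that build step is what makes the push land in GitLab: Docker picks the registry from the image name itself. If the first path component looks like a host (it contains a `.` or `:`, or is `localhost`), that’s the registry; otherwise the image goes to Docker Hub. The Python below is a simplified model of that rule (the real reference parser has a few more edge cases), plus the three commands from above as argument lists. `192.168.1.10:5050` and `username` are placeholders.

```python
import subprocess


def registry_host(reference: str) -> str:
    """Simplified model of how Docker picks a registry from an image name."""
    first, sep, _ = reference.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"  # no host component: the image belongs to Docker Hub


def push_commands(registry: str, username: str, project: str, tag: str = "latest") -> list:
    """Build the login/build/push commands for a GitLab-hosted image."""
    image = f"{registry}/{username}/{project}:{tag}"
    return [
        ["docker", "login", registry, "-u", username],  # prompts for the password
        ["docker", "build", ".", "-t", image],
        ["docker", "push", image],
    ]


def run_all(commands: list) -> None:
    """Run each command in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

So `alaskanbeard/twitch-notifier` from the first build would target Docker Hub, while the `gitlabIP:port/...` tag targets the self-hosted registry.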

4. Pulling from GitLab using Portainer

The first question I should probably answer is why I’m using Portainer when Unraid has these templates. The answer is simple: I’m too lazy to write a template, and I like getting experience with a different product that’s a little more applicable to the real world.

This is the one that had me banging my head against a wall for a couple of hours. By default, Portainer pulls from Docker Hub, but you can also add other registries: authenticated Docker Hub, AWS, GitLab, and custom (as well as a few others). GitLab is probably piquing your interest, as it did mine. The process seemed simple: a username and a personal access token. No problem, I generate one, and it won’t connect. Interesting, I say to myself, but then I notice a button that says “override default configuration”. I click it and see some default URLs, which obviously won’t work for me. I set those to `http://gitlabIP:port` and `http://gitlabregistryIP:port` and figure I’m off to the races. Nope. It just doesn’t work.
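The post never pins down why the built-in GitLab option refused to connect, so treat this as background rather than a diagnosis. GitLab’s registry uses the Docker registry token flow: an unauthenticated `GET /v2/` returns 401 with a `WWW-Authenticate: Bearer realm=...,service=...` header, and the client then trades the username and access token for a registry token at that realm (GitLab serves it from `/jwt/auth` under its own external URL). If any of those URLs carry the wrong scheme or host, login can fail even with good credentials. The helpers below are a hypothetical sketch that parses the challenge and requests the token; the IP, port, and repository path in the test are placeholders.

```python
import base64
import json
import re
import urllib.request
from urllib.parse import urlencode


def parse_bearer_challenge(header: str) -> dict:
    """Split a `Bearer realm="...",service="..."` challenge into a dict."""
    scheme, _, params = header.strip().partition(" ")
    if scheme.lower() != "bearer":
        raise ValueError(f"not a Bearer challenge: {header!r}")
    return dict(re.findall(r'(\w+)="([^"]*)"', params))


def token_request_url(challenge: dict, repository: str, action: str = "pull") -> str:
    """Build the token-endpoint URL for one repository and action."""
    query = urlencode({"service": challenge["service"], "scope": f"repository:{repository}:{action}"})
    return f"{challenge['realm']}?{query}"


def fetch_token(url: str, username: str, access_token: str) -> str:
    """Exchange username + access token (HTTP basic auth) for a registry token."""
    creds = base64.b64encode(f"{username}:{access_token}".encode()).decode()
    request = urllib.request.Request(url, headers={"Authorization": f"Basic {creds}"})
    with urllib.request.urlopen(request, timeout=10) as response:
        body = json.load(response)
    # Token servers may return the value as "token", "access_token", or both.
    return body.get("token") or body.get("access_token")
```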

Next, I decided to add it as a custom registry. All that requires is a username, a password, and the registry URL. Easy peasy: I add it, and it’s successful! Alright, I think, the next step is to pull the image I want. I go to Images, change the source to my newly added GitLab registry, and try to pull `username/twitch-notifier`. Error: an insecure connection over HTTPS. Well, I’ve seen this one before, easy fix, right? Ha. If only.
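A quick way to see what a registry is actually offering is to hit the base of the registry HTTP API, `GET /v2/`, over both schemes. A v2 registry answers with 200 or 401 plus a `Docker-Distribution-API-Version: registry/2.0` header; against a plain-HTTP registry, the HTTPS attempt typically fails at the TLS handshake, which lines up with the error above. This is a standard-library sketch, and the host you pass in is up to you.

```python
import urllib.error
import urllib.request

API_HEADER = "Docker-Distribution-API-Version"


def interpret(scheme: str, status: int, api_version) -> str:
    """Turn one /v2/ response into a short human-readable verdict."""
    if status in (200, 401) and api_version == "registry/2.0":
        return f"{scheme}: registry API answering (HTTP {status})"
    return f"{scheme}: answered without the registry/2.0 header (HTTP {status})"


def probe(host: str, timeout: float = 5.0) -> list:
    """Try GET /v2/ over HTTPS, then HTTP, and report what each returned."""
    lines = []
    for scheme in ("https", "http"):
        url = f"{scheme}://{host}/v2/"
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                lines.append(interpret(scheme, resp.status, resp.headers.get(API_HEADER)))
        except urllib.error.HTTPError as err:
            # A 401 arrives as HTTPError, but its headers are still available.
            lines.append(interpret(scheme, err.code, err.headers.get(API_HEADER)))
        except (urllib.error.URLError, OSError) as err:
            # TLS handshake failures against a plain-HTTP registry land here.
            lines.append(f"{scheme}: no answer ({err})")
    return lines
```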

I spent a while (like 90 minutes) researching how to fix this, and it seemed like I might be able to add a `daemon.json` to Portainer, but of course the Portainer container doesn’t offer any kind of shell access. This was a new one for me, but apparently Docker lets you dump the filesystem of a container (which is sick, by the way), make changes, and reimport it. It took me a while to settle on doing this, but that’s where I landed after extensive reading.

Dumping the filesystem is actually pretty easy: it was as simple as `docker export -o /some/path containerID`, which writes the container’s filesystem out as a tar archive. After that, I was able to poke around the filesystem, hoping to find some kind of Docker config file; of course, there wasn’t one. I honestly don’t know if creating one would have worked, since it was at this point I decided to just do things correctly (like I should have back when I saw the insecure connection issues with Docker Desktop): set up a domain for the registry and get an SSL certificate for it.
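Because `docker export` produces a plain tar archive, you can search it without unpacking anything. The Python below is a hypothetical helper that lists files named `daemon.json` or `config.json` (that name list is a guess at what’s worth checking). As for why nothing turned up, a likely reason, though it wasn’t tested here, is that Portainer doesn’t pull images itself: it asks the host’s Docker daemon to do it through the mounted Docker socket, so the setting that matters would be the host daemon’s `insecure-registries`, not anything inside the Portainer container. One more caveat on the reimport side: `docker import` rebuilds an image from the filesystem only, so settings like `CMD` have to be supplied again with `--change`.

```python
import tarfile

# Base file names worth a look when hunting for Docker/registry settings.
INTERESTING = frozenset({"daemon.json", "config.json"})


def find_config_files(tar_path=None, fileobj=None, names=INTERESTING) -> list:
    """List regular files in a `docker export` tarball whose base name is in `names`."""
    with tarfile.open(name=tar_path, fileobj=fileobj) as tar:
        return sorted(
            member.name
            for member in tar.getmembers()
            if member.isfile() and member.name.rsplit("/", 1)[-1] in names
        )
```

Usage is just `find_config_files("/some/path")`, pointed at whatever path you gave `docker export -o`.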

But yeah, that was pretty much it (and a very anticlimactic post). I set up a new domain on my DNS forwarder, added it to Nginx Proxy Manager, and that was it. Portainer was able to connect without issue and pull the image. I created a docker-compose file for it, just for simplicity’s sake, but since the container doesn’t need much beyond a `.env` file and a port, it was only a couple of lines.
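The compose file really is tiny. Here’s a sketch that renders one; the service name, the `registry.example.com` host in the test, and the `restart: unless-stopped` policy are placeholders and an addition, not necessarily what ended up in Portainer. It leaves out the top-level `version:` key, which current Docker Compose treats as obsolete, though older tooling may still expect it.

```python
def render_compose(service: str, image: str, host_port: int,
                   container_port: int = 3000, env_file: str = ".env") -> str:
    """Render a minimal docker-compose YAML for a single-container app."""
    lines = [
        "services:",
        f"  {service}:",
        f"    image: {image}",
        f"    env_file: {env_file}",
        "    ports:",
        f'      - "{host_port}:{container_port}"',  # quoted so YAML keeps it a string
        "    restart: unless-stopped",
    ]
    return "\n".join(lines) + "\n"
```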

The only things left to do are re-registering Docker Desktop with the new GitLab URL and fixing my scuffed naming from Portainer’s docker-compose (it’s comically bad).